AI will replace all coding

The end of coding is near

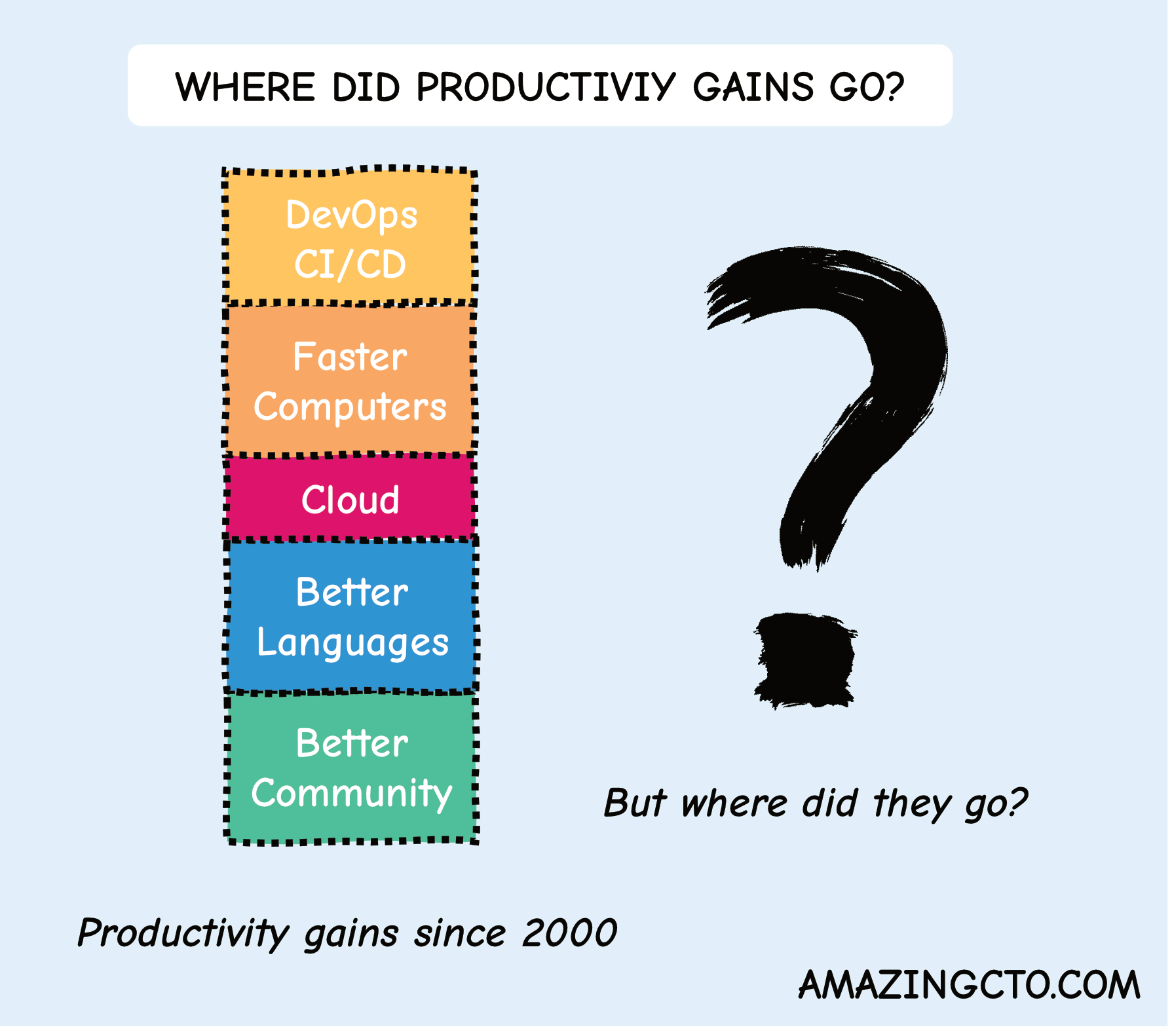

I grew up writing Z80 assembler during the 8-bit era. I wrote some 8-bit games. I wrote 68000 assembler during the Amiga demo scene days. I wrote IRC bots in C on a VAX when the internet arrived. I wrote HTML by hand on a DEC Alpha when the web was invented. I wrote CGI scripts in Perl. I rode the Java hype. I founded some startups. I created successful open source projects. I dived into XP. I became a certified Scrum Master. I believed functional programming was the next big thing. I fought Rust's borrow checker off the shoulder of Orion. I love Go.

Now this.

I realized that AI will take away all coding. All of it. Everything I loved. Sure, some people will keep writing code, just as some people today still use mechanical typewriters, or write Fortran, or write new games for 8-bit computers. They exist, but it’s a niche. Most of what happens in computers in the future will not be code but self-modifying, self-optimizing AI models being executed. Coding was just a phase. Like steam engines.

Developers think, “Fine, then I can spend more time on the things I want to build.”

This is not how AI will work. It’s not that I want to build something and the AI helps me; the AI will just do the things. There will no longer be things to “build.” We will no longer think of code as something that exists, but as things that just happen. Like magic.

Developers might think that the AI will write parts of a CRM system, the boring parts. There is no CRM system, just like there is no spoon. You will tell the AI what problem to solve, and it will solve it. Later it will solve problems on its own initiative, without any human involvement.

You might say, “But I could tell the AI what film to create, give it some scenes and a rough story, and it will create that film.” But what if the AI creates better, more powerful, more exciting films than any you could imagine? Films no human ever thought of? Films that are suspenseful, that make you laugh and make you cry?

Again, like the typewriter, some people will tell the AI fragments of a story to create a film. Their film. And there will be some stars and some hype around this. A novelty. But most media content will be created by AI, for consumption, on the initiative of AIs, not on the initiative of humans.

Too far-fetched?

If anything, people have been too pessimistic about the speed of change that technology brings; see mobile phones, cars, the internet, etc. Mainstream assumptions and predictions tended to be: limited impact, a hype, it will go away. This is because people resist change. Examples include estimates of the demand for computers (the famous “I think there is a world market for maybe five computers.”), the demand for cars, the internet (“I predict the Internet will soon go spectacularly supernova and in 1996 catastrophically collapse.”, from the inventor of Ethernet; the dotcom startups blew up, but not the internet, which just grew and grew and grew), and the demand for mobile phones (AT&T estimated peak demand at 900,000 mobile phones; today Apple alone sells roughly that many phones a day!). The only people who probably overestimated the impact were sci-fi writers (I’m a 70s kid and still not living on the moon!).

In the mid-90s, prompted by my first digital camera (an Olympus C-800L), I wrote a philosophy paper at university about an AI that generates random images and then interprets them. I estimated how fast it could do both, generation and interpretation, and how many pictures it “could see”. It was what we today call generative AI (though a very bad one). That AI has basically seen it all: an alien killing JFK, me on the moon, you and me drinking a beer together, and things we could never imagine. Like the AI I mentioned above that creates films on its own. Films no one could ever think of.

If we want to predict the future, it might help to look at how technology evolves and what drives its progress. What drives technology forward? And why?

My model of technical progress

- Progress is driven by (Drivers): cost and convenience

- Progress is limited by (Inhibitors): technical capabilities, e.g. going to the moon in 1800

- Progress is stopped by (Preventors): laws of physics, e.g. no faster-than-light travel :-(

When we take this model and evaluate AI with it, we get:

Drivers: Costs and convenience:

- Very high: no other technology offers more in both cost savings and convenience

Inhibitors: Technical capabilities:

- No limits I can see over the next 50 years. Some people cite energy, but falling solar panel prices mean we will be thinking about how to get rid of surplus energy in the future; there will be too much.

Preventors: Laws of Physics:

- I'm not aware of any laws of physics or other preventors that would stop AI from progressing

Still, this looks abstract. HOW will coding change over the next few years? Perhaps the grand vision is too hard to come up with. It gets easier when we break the collapse of coding down into milestones.

Milestones, mainstream, near future (~5 years):

- Writing and updating all tests (unit, E2E): the end of QA

- Code reviews, and fixing code on check-in according to what the AI was trained on

- Bug fixing: reading a bug report, finding the problem, writing a fix

- Fixing incidents: fixing the code errors that led to them

Milestones, mainstream (~10 years):

- Data warehousing: replacing all data infrastructure with data lakes and LLMs

- Running, scaling and fixing infrastructure, removing the need for DevOps entirely

- Zero-problem technology migrations (e.g., Django to NestJS)

- Generating all frontend code from Figma (not only for new UIs, but also AIs changing existing code)

- AI deciding which features the market needs and what they look like (reducing the PM role)

- AI creating mobile apps from web apps and vice versa

- Merging of the Dev and PM roles into a ProductDev (PD) role

- A new AI model developer role (alongside the PD), similar to the rise of the mobile developer

Future (~20 years):

- Replacement of software like CMS, CRM, social media, etc. with a general AI blob

Far Future (~50 years):

- General AI running things (see Iain M. Banks' Culture universe)

- AIs making major decisions

- Companies, if they still exist, run only by AI with no people involved

So there you have it: AI will blow away all coding. Having read my arguments, are you still not convinced? Or already looking for a new job?